Abstract

Video

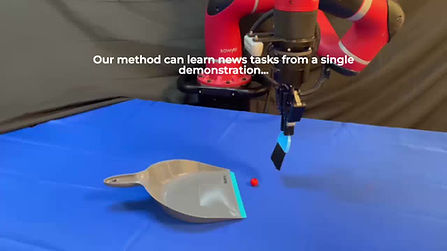

We propose DINOBot, a novel imitation learning framework for robot manipulation, which leverages the image-level and pixel-level capabilities of features extracted from Vision Transformers trained with DINO. When interacting with a novel object, DINOBot first uses these features to retrieve the most visually similar object experienced during human demonstrations, and then uses this object to align its end-effector with the novel object to enable effective interaction. Through a series of real-world experiments on everyday tasks, we show that exploiting both the image-level and pixel-level properties of vision foundation models enables unprecedented learning efficiency and generalisation.

Key Idea

By leveraging both the pixel-level and image-level understanding abilities of Vision Foundation Models, DINOBot is able to learn many everyday tasks with a single demo, including tasks requiring precision or dexterity, while generalising to many different objects and remaining robust to distractors and visual changes. Therefore, only a handful of demos in total are needed to obtain a general and versatile repertoire of manipulation abilities.

The use of Vision Foundation Models in Robotics

Robot perception plays a fundamental role in manipulation: understanding what the robot is seeing is crucial to compute and deploy a manipulation strategy. As robotics-centered data is scarce, researchers have often relied on large vision networks pretrained on web-scale image datasets, called Vision Foundation Models, to extract information from observations. These networks learn to output effective and descriptive embeddings of their visual inputs, useful for many downstream tasks. The common paradigm in robotics is to use these networks and their embeddings as a backbone on top of which a policy network is finetuned.

Is this the optimal strategy? In this work, we demonstrate that there is an alternative, more effective solution that fully leverages the pixel-level and image-level abilities of these pretrained vision networks.

To use the full potential of Vision Foundation Models, we recast the vision-based imitation learning problem as two computer vision problems: understanding what to do with an object, and how, via image-level retrieval from a dataset of demonstrations; and where to start the interaction, via pixel-level alignment between the live image and a goal image. We use DINO, a family of state-of-the-art Vision Foundation Models, hence the name of our method, DINOBot. DINOBot is able to learn many everyday tasks with a single demo, including tasks requiring precision or dexterity, generalising to many different objects and being robust to distractors and visual changes.

Manipulation via Retrieval, Alignment and Replay

As explored in our previously published work, implementing a manipulation pipeline as a sequence of retrieval, alignment and replay leads to unprecedented learning efficiency and generality. However, that work still relied heavily on collecting task-specific data (albeit in a self-supervised manner) and on training visual alignment networks.

In DINOBot, we continue this line of research, demonstrating that Vision Foundation Models can do much of the heavy lifting if we decompose manipulation into these three phases.

Demo Recording

Let's see how the training and testing phases are connected. At training time, the user provides a set of demonstrations showing how to accomplish a set of tasks. Notably, only a single demo per task and object class is needed (e.g. a single demo of grasping a mug is sufficient to then grasp new mugs).

For each demo, three pieces of data are stored together in the buffer of demonstrations. First, the bottleneck observation: the image captured by the wrist camera at the pose where the demonstration started, called the bottleneck pose. Second, the end-effector trajectory, stored in its entirety as a list of velocities in the end-effector frame. Finally, the task name, such as insert or open.
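A minimal sketch of such a buffer in Python, assuming NumPy arrays for the stored data. The class and field names (`Demo`, `DemoBuffer`, `for_task`) are hypothetical, as are storing the bottleneck observation as a single RGB-D array (the depth is what later allows keypoints to be lifted to 3D) and the 6-D layout of each velocity.

```python
from dataclasses import dataclass, field
from typing import List

import numpy as np


@dataclass
class Demo:
    bottleneck_rgbd: np.ndarray  # H x W x 4 wrist-camera RGB-D image at the bottleneck pose (layout assumed)
    velocities: np.ndarray       # T x 6 end-effector-frame twists (vx, vy, vz, wx, wy, wz), assumed layout
    task: str                    # task name, e.g. "insert" or "open"


@dataclass
class DemoBuffer:
    demos: List[Demo] = field(default_factory=list)

    def add(self, demo: Demo) -> None:
        """Store one demonstration (training phase)."""
        self.demos.append(demo)

    def for_task(self, task: str) -> List[Demo]:
        """Return only the demos recorded for the requested task (used at test time)."""
        return [d for d in self.demos if d.task == task]
```

Filtering by task name up front mirrors the test-time behaviour, where only demos of the requested task are considered during retrieval.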

At the end of the training phase, the buffer has therefore been filled with a series of demonstrations. Since only a single demo per task and object class is needed, a few minutes are enough to record the demonstrations required for the robot to acquire a large repertoire of skills.

Having illustrated the simplicity of the training phase, whose role is to fill the buffer of demonstrations, we now look at how DINOBot operates at test time.

Retrieval Phase

During deployment, the user asks the robot to perform a task, such as grasp or stack. The robot then records an observation of the environment from its wrist camera, obtaining an image of the object to interact with.

The first phase is the retrieval phase. We leverage the image-level understanding ability of DINO-extracted descriptors to find the demonstration in our buffer that is most similar to the current scene, which then guides the behaviour of the robot. In particular, we compare the descriptor extracted from the live observation to those of all stored bottleneck observations, searching only among demos that solve the task the user asked for.

Once the best match is found, we retrieve its bottleneck observation and the corresponding end-effector trajectory recorded during the demo.
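The retrieval step can be sketched as a nearest-neighbour search over descriptors, restricted to the requested task. This is an illustration under stated assumptions, not DINOBot's code: it assumes each image has already been reduced to a single global DINO descriptor vector (for example a CLS-token embedding) and that cosine similarity is used to compare them. Descriptor extraction is not shown, and `retrieve` is a hypothetical name.

```python
import numpy as np


def retrieve(live_descriptor, demo_descriptors, demo_tasks, task):
    """Return the index of the most similar demo that solves `task`, or None.

    live_descriptor:  (D,) global descriptor of the live wrist-camera image.
    demo_descriptors: (N, D) descriptors of the stored bottleneck images.
    demo_tasks:       list of N task names, aligned with demo_descriptors.
    """
    # Only consider demos of the requested task.
    candidates = [i for i, t in enumerate(demo_tasks) if t == task]
    if not candidates:
        return None
    feats = np.asarray(demo_descriptors, dtype=float)[candidates]
    live = np.asarray(live_descriptor, dtype=float)
    # Cosine similarity = dot product of L2-normalised vectors.
    feats = feats / np.linalg.norm(feats, axis=1, keepdims=True)
    live = live / np.linalg.norm(live)
    sims = feats @ live
    return candidates[int(np.argmax(sims))]
```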

Alignment Phase

We then use the retrieved bottleneck observation as a goal image to guide the end-effector through a visual servoing phase: the alignment phase. Here we use the pixel-level descriptors extracted from DINO to find a set of common keypoints, i.e. a set of matching descriptors between the live and bottleneck observations, through a Best Buddies Nearest Neighbours matching algorithm. This process finds a set of 2D keypoint correspondences, which are then projected to 3D using the depth provided by the RGB-D wrist camera.
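A simplified sketch of the matching and projection steps. It assumes per-patch DINO descriptors have already been extracted from both images and that each patch can be mapped to a pixel coordinate (e.g. its centre). Best Buddies matching is implemented here as plain mutual nearest neighbours under cosine similarity, which may omit filtering used in practice. Back-projection assumes a pinhole camera with known intrinsics `fx, fy, cx, cy` and a depth image in metres. Both function names are hypothetical.

```python
import numpy as np


def best_buddies(desc_a, desc_b):
    """Mutual nearest-neighbour ("best buddies") matching.

    desc_a: (Na, D) and desc_b: (Nb, D) per-patch descriptors.
    Returns (i, j) pairs where a[i] and b[j] are each other's nearest
    neighbour under cosine similarity.
    """
    a = desc_a / np.linalg.norm(desc_a, axis=1, keepdims=True)
    b = desc_b / np.linalg.norm(desc_b, axis=1, keepdims=True)
    sim = a @ b.T                  # (Na, Nb) cosine similarities
    nn_ab = sim.argmax(axis=1)     # best match in b for each row of a
    nn_ba = sim.argmax(axis=0)     # best match in a for each row of b
    return [(i, int(j)) for i, j in enumerate(nn_ab) if nn_ba[j] == i]


def backproject(uv, depth, fx, fy, cx, cy):
    """Lift integer pixel coordinates (u, v) to 3D camera-frame points.

    uv: (N, 2) integer pixel coordinates; depth: (H, W) depth in metres.
    """
    u, v = uv[:, 0], uv[:, 1]
    z = depth[v, u]                # depth is indexed as [row, column]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

Requiring the match to be mutual discards many ambiguous pairs, for example patches on distractors that have a nearest neighbour in the other image but are not that neighbour's best match in return.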

Given the set of correspondences, we use SVD to find the rigid translation and rotation of the end-effector that aligns the two point sets by minimising the squared error. By repeating this process several times, the end-effector aligns itself with the new object in the same way it was positioned relative to the retrieved demo object at the start of the demonstration.
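The SVD step corresponds to the classic least-squares rigid registration (Kabsch) solution. A minimal NumPy sketch, assuming `src` holds the 3D keypoints from the live observation and `dst` the matching keypoints from the bottleneck observation, both in the camera frame. `rigid_transform` is a hypothetical name, and converting the result into an end-effector command (which requires the camera-to-gripper calibration) is not shown.

```python
import numpy as np


def rigid_transform(src, dst):
    """Least-squares rotation R and translation t with R @ src[i] + t ≈ dst[i].

    src, dst: (N, 3) arrays of corresponding 3D points (N >= 3, not collinear).
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)   # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Reflection correction so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t
```

With this convention, `(R, t)` maps live camera-frame points onto where they appear in the bottleneck view, so the camera motion that would reproduce the bottleneck view is the inverse of this transform.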

Replay Phase

If this alignment is precise, we can then replay the demonstration velocities to interact with the new object just as we interacted with the demo object. On the right, we show how this process transfers a demo received on a can to a novel can. However, as we show in the paper, this method can generalise even beyond objects of the same class.
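On the real robot, replay means commanding the recorded velocities. The sketch below instead integrates them numerically, only to illustrate why replay transfers to the new object: because the velocities are expressed in the end-effector frame, the same sequence started from a different aligned pose traces the same motion relative to that pose. The function names, the 6-D twist layout, the fixed timestep `dt` and the first-order integration scheme are all assumptions for illustration.

```python
import numpy as np


def skew(w):
    """3x3 skew-symmetric matrix such that skew(w) @ x == np.cross(w, x)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def so3_exp(w):
    """Rodrigues' formula: rotation matrix for the rotation vector w."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    K = skew(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def replay(R0, p0, velocities, dt):
    """Integrate end-effector-frame twists (vx, vy, vz, wx, wy, wz) from pose (R0, p0).

    First-order scheme: each step moves by the linear velocity expressed in the
    current end-effector frame, then rotates that frame by its angular velocity.
    Returns the final orientation and the list of visited world-frame positions.
    """
    R = np.array(R0, dtype=float)
    p = np.array(p0, dtype=float)
    positions = [p.copy()]
    for twist in np.asarray(velocities, dtype=float):
        v, w = twist[:3], twist[3:]
        p = p + (R @ v) * dt
        R = R @ so3_exp(w * dt)
        positions.append(p.copy())
    return R, positions
```

For example, a demo that moves along the gripper's own x axis moves along world x from an identity start pose, but along world y from a start pose rotated 90° about z: the motion follows the aligned gripper, not the world frame.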

The testing phase is therefore a sequence of retrieval, alignment and replay: since the first two phases leverage DINO-extracted descriptors that encode semantic and geometric information about objects, the final phase of manipulation can be achieved via simple trajectory replay.

Results

DINOBot enables robots to acquire a variety of everyday behaviours through a single demonstration per task, and to generalise effectively to new objects. In the video on the right, we show how just 6 demos lead to successful interaction with a set of new objects, both intra-class and inter-class. The robot autonomously decides how to interact with the object via retrieval, and executes the interaction via alignment and replay.

We tested DINOBot against a series of baselines on 15 tasks and more than 50 objects. Detailed results are reported in the bar plot below, showing how DINOBot can surpass a series of baselines even with 10x fewer demos. Remarkably, we demonstrate that our explicit use of DINO features greatly surpasses finetuning a Behaviour Cloning policy on top of those same features.

Here we show a series of videos where the robot is deployed immediately after a single demonstration.

Here we showcase how DINOBot generalises effectively, both intra-class and inter-class, after a single demonstration.

By comparing the DINO-extracted descriptors of the goal bottleneck image and the live image, and only extracting a set of discrete keypoints common to both, DINOBot effectively ignores distractors and scene changes and focuses on the object seen during the demo. Below, the left video shows a demo being recorded, while the center and right videos show that we can not only deploy the skill on unseen objects, but also clutter the environment and visually change the scene with minimal decrease in performance.

Questions and Limitations

What about tasks that require complex feedback loops, like following the contour of an object? Our method cannot be applied to these tasks in its current form, but we still show that DINOBot solves a large set of everyday tasks with better learning efficiency than methods using closed-loop policies.

Why not use a third-person camera to observe more context? We use a wrist camera to exploit its properties. Using a third-person camera to observe more context is indeed something we plan to explore in future work.

What if we want to provide multiple demonstrations of the same task for an object? Our proposed method is designed specifically so that only a single demonstration is needed per task and object. However, our framework can indeed receive multiple demonstrations across different objects, which can simply be added to the buffer for later retrieval. While this is possible, we did not see a concrete benefit in DINOBot from providing multiple demonstrations for the same task and object.

Is an open-loop skill sufficient to interact with unseen objects? Although open-loop skills cannot adapt to objects with a very different shape, we empirically demonstrate that, with a correct selection of the best skill through retrieval and a precise alignment, these skills can successfully interact with a plethora of unseen objects.

How computationally heavy is the framework? While a high-end GPU can run a forward pass of a base DINO-ViT in around 0.1 seconds, correspondence matching can take several seconds. However, we believe that better computing hardware and parallel computing optimisations can drastically reduce this.

What are the main failure modes? Failures of our framework can happen at different stages: 1) wrong retrieval of a goal image (e.g. a very different object), 2) wrong correspondence matching, 3) noisy depth estimation from the wrist camera (which affects the visual alignment phase), 4) inability of the skill to successfully interact with the object (e.g. due to a fundamentally different object shape). We found that the most common source of failures was degraded depth estimation when the camera is very close to an object, or with objects that have a difficult shape (e.g. bottles seen from above, reflective objects). We believe that improvements in DINO-ViTs and in depth-camera technologies can significantly reduce all these failure modes.